Internal AI ≠ Zero Vendor Risk: Why Your Supply Chain Still Matters

Yesterday, at London Tech Week, a fellow executive told me, “We build our own AI, so third‑party risk isn’t a concern.” I understand the sentiment, but it’s dangerously incomplete.

Where “Internal‑Only” Falls Short

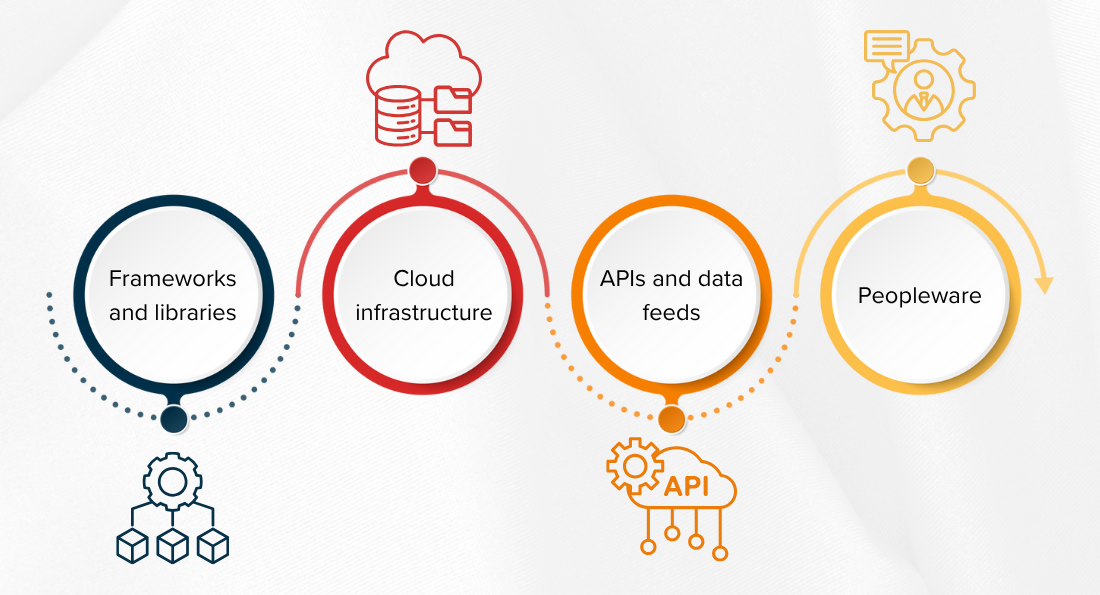

Even if your data scientists code every model from scratch, you still rely on:

- Frameworks and libraries – PyTorch, TensorFlow, Hugging Face. Any hidden vulnerability becomes your vulnerability.

- Cloud infrastructure – GPU instances, managed databases, and object storage. Upstream outages can halt your pipelines, and upstream misconfigurations can expose your data.

- APIs and data feeds – payment gateways, KYC providers, market data, perhaps a translation LLM. Each link is a potential breach point.

- Peopleware – consultants, auditors, and offshore teams who touch your code or data.
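A practical first step is simply listing what a "home‑grown" AI stack already pulls in. As a minimal sketch, assuming a Python‑based stack, the standard library's `importlib.metadata` can enumerate every distribution installed in the current environment, and each of those is, in effect, a vendor. The `build_inventory` helper and the watch‑list are hypothetical illustrations, not part of any product.

```python
from importlib.metadata import distributions


def build_inventory() -> dict[str, str]:
    """Return {package_name: version} for every distribution installed
    in the current Python environment (each one is a de facto vendor)."""
    inventory: dict[str, str] = {}
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name:  # skip broken installs that lack a Name field
            inventory[name.lower()] = dist.version
    return inventory


if __name__ == "__main__":
    inventory = build_inventory()
    # Hypothetical watch-list of frameworks; "transformers" is assumed to
    # stand in for the Hugging Face dependency mentioned above.
    watched = {"torch", "tensorflow", "transformers"}
    print(f"{len(inventory)} third-party packages installed")
    for name in sorted(watched & inventory.keys()):
        print(f"  {name}=={inventory[name]}")
```

Even a lean data‑science environment typically lists dozens of packages, which is the point: "we build our own" still means "we depend on many others".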

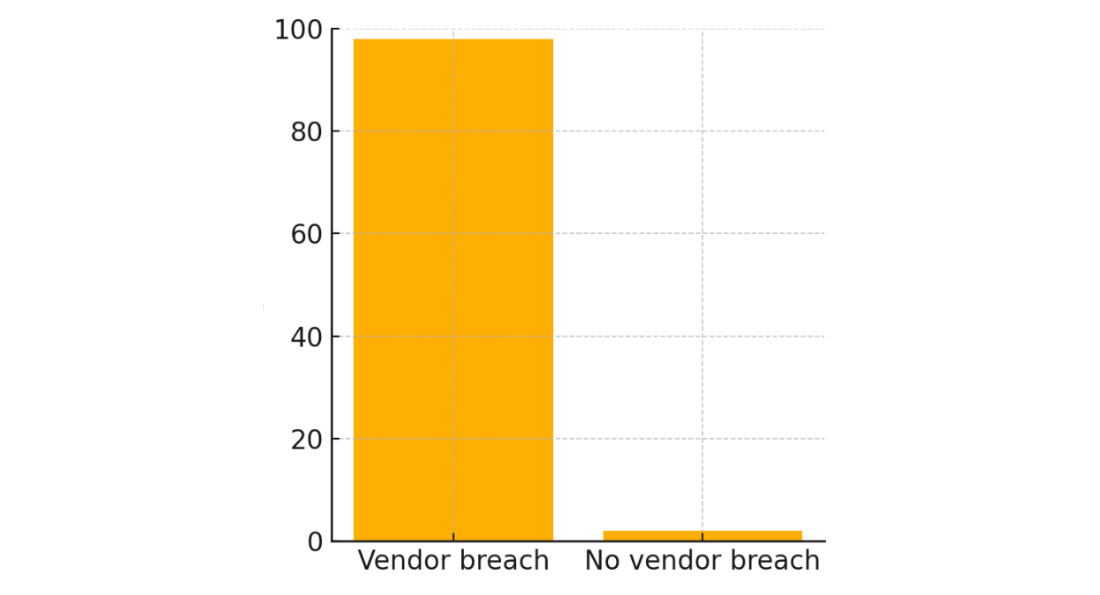

By the numbers: 30% of data breaches last year were traced to suppliers, and another study found that 98% of organisations have at least one vendor that has already been breached.

Regulators have taken note: the New York Department of Financial Services (DFS) has told the banks it regulates to account for AI service providers when managing third‑party risk under their cybersecurity programs.

The Four Hidden Exposures

Below are four blind spots that quietly accumulate risk even when your AI stack is mostly home‑grown:

- Code lineage – Open‑source libraries ship faster than they’re audited; one unpatched dependency can wreck your secure‑dev story.

- Model provenance – You may fine‑tune on external datasets whose licensing or bias controls are uncertain.

- Operational workflows – CI/CD, logging, and monitoring often run on third‑party SaaS solutions. Credentials stored there are prime targets.

- Regulatory optics – Supervisors review the entire supply chain. The US Treasury’s AI report urges review of “higher‑risk generative AI,” whether built or bought.
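The code‑lineage blind spot lends itself to automation. The sketch below compares pinned dependencies against advisory records and flags any package still below the version that fixes a known issue. The package names and advisories are hypothetical placeholders; in practice they would come from a lock file and a vulnerability feed such as OSV, and real version strings need a full parser (for example, `packaging.version`) rather than the simplified numeric parser assumed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    package: str
    fixed_in: str  # first version that contains the fix
    summary: str


def parse_version(version: str) -> tuple[int, ...]:
    """Simplified parser for purely numeric dotted versions such as '2.1.0'.
    Trailing zeros are dropped so '2.1' and '2.1.0' compare equal.
    Pre-release tags and other suffixes are NOT supported (assumption)."""
    parts = [int(p) for p in version.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def find_unpatched(pins: dict[str, str], advisories: list[Advisory]) -> list[str]:
    """Return one finding per pinned package older than its fixed version."""
    findings = []
    for adv in advisories:
        pinned = pins.get(adv.package)
        if pinned is None:
            continue  # package is not used by this project
        if parse_version(pinned) < parse_version(adv.fixed_in):
            findings.append(f"{adv.package}=={pinned} < {adv.fixed_in}: {adv.summary}")
    return findings


if __name__ == "__main__":
    pins = {"examplelib": "1.4.2", "otherlib": "3.0.0"}  # hypothetical lock file
    advisories = [  # hypothetical advisory records
        Advisory("examplelib", "1.4.5", "hypothetical deserialization flaw"),
        Advisory("otherlib", "2.9.0", "hypothetical path traversal"),
        Advisory("notinstalled", "1.0.0", "not relevant to this project"),
    ]
    for finding in find_unpatched(pins, advisories):
        print(finding)
    # prints: examplelib==1.4.2 < 1.4.5: hypothetical deserialization flaw
```

Running a check like this in CI turns "one unpatched dependency" from a surprise into a tracked finding.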

A Prudent Response

The following figure illustrates a four‑step process for mitigating this exposure: map the ecosystem, score the risk, close the loop, and report up and out.

- Map the ecosystem – Treat every service, library, and data feed as a vendor, even if it is free or open source.

- Score the risk – Use a lightweight assessment covering transparency, privacy, compliance, governance, ethics, and monitoring (the six pillars we use in Batoi Insight).

- Close the loop – Feed gaps into an audit workflow tool, such as RegStacker, that assigns owners, tracks evidence, and proves closure.

- Report up and out – Boards and regulators expect dashboard‑level evidence that third‑party AI risk is managed with the same rigour as in‑house models.
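To make "score the risk" and "close the loop" concrete, here is a minimal sketch that rates a dependency on the six pillars named above, computes an overall score, and turns every weak pillar into an owned remediation item that can only be closed with evidence. The 0 to 5 rating scale, equal weighting, the threshold of 3, and the `RemediationItem` structure are all assumptions for illustration; this is not how Batoi Insight or RegStacker work internally.

```python
from dataclasses import dataclass

# The six assessment pillars named in the article.
PILLARS = ("transparency", "privacy", "compliance", "governance", "ethics", "monitoring")


@dataclass
class RemediationItem:
    vendor: str
    pillar: str
    rating: int
    owner: str
    evidence: list[str]
    closed: bool = False

    def close(self, evidence_ref: str) -> None:
        """Closing requires evidence, so auditors can verify the fix."""
        if not evidence_ref:
            raise ValueError("evidence is required to close an item")
        self.evidence.append(evidence_ref)
        self.closed = True


def score_vendor(ratings: dict[str, int]) -> float:
    """Average the 0-5 pillar ratings (equal weights assumed)."""
    missing = set(PILLARS) - ratings.keys()
    if missing:
        raise ValueError(f"unrated pillars: {sorted(missing)}")
    return sum(ratings[p] for p in PILLARS) / len(PILLARS)


def open_gaps(vendor: str, ratings: dict[str, int], owner: str,
              threshold: int = 3) -> list[RemediationItem]:
    """Create one owned remediation item per pillar rated below threshold."""
    return [
        RemediationItem(vendor, p, ratings[p], owner, evidence=[])
        for p in PILLARS
        if ratings[p] < threshold
    ]


if __name__ == "__main__":
    # Hypothetical ratings for an open-source library treated as a vendor.
    ratings = {"transparency": 4, "privacy": 2, "compliance": 3,
               "governance": 1, "ethics": 3, "monitoring": 5}
    print(f"overall score: {score_vendor(ratings):.2f} / 5")  # 3.00 / 5
    items = open_gaps("examplelib", ratings, owner="platform-security")
    for item in items:  # privacy and governance fall below the threshold
        print(f"open: {item.pillar} (rated {item.rating}) -> {item.owner}")
    items[0].close("hypothetical-ticket-123")
    print(sum(not i.closed for i in items), "item(s) still open")  # 1
```

Note that the overall score alone (3.00) would look acceptable; surfacing per‑pillar gaps with named owners is what makes the loop auditable.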

Why This Matters Now

AI supply chains are expanding, not shrinking. Model families depend on ever‑larger parameter sets, GPU clouds, and niche data brokers. A blind spot today can become a regulator‑mandated contract rewrite tomorrow.

Your edge lies not in ignoring vendor risk but in mastering it faster than your peers:

- Visibility across internal and external code paths

- Velocity in resolving issues

- Verifiability for auditors and customers

Final Thought

Building AI in‑house is smart. Believing it makes you immune to third‑party risk is not. The winners treat every dependency, whether library, cloud, or partner, with the same scrutiny they apply to their own models.

Want to see how an assessment‑to‑remediation loop works? Let’s connect.

This article has been published on LinkedIn.
